I chose to reflect on a technology that a classmate of mine covered: the history of the Google search engine. A link to their blog post can be found here. I chose this technology because I have used Google's search engine many times, especially Google Scholar.

When I was reviewing the presentation about Google's search engine, I found quite a few things I did not know about Google. For example, I did not know about its origins or its use of "spiders". I only knew what the search engine used to look like, the number of products Google has produced, both dead and alive, and the doodles it makes!

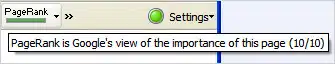

What would become the Google search engine started at Stanford University, where Larry Page and Sergey Brin, two graduate students, met in 1995. By 1996 they had built a program called BackRub, which used an algorithm called PageRank.

This algorithm treats each link from one webpage to another as a "vote" for the page being linked to. Votes from pages that are themselves ranked highly are weighted more heavily, so a page's rank depends on both how many pages link to it and how important those linking pages are.
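To make the voting idea more concrete, here is a minimal PageRank sketch in Python using power iteration. The four-page link graph, the function name `pagerank`, the 50 iterations, and the way pages with no outgoing links are handled are all assumptions for illustration; the damping factor of 0.85 is the value suggested in the original PageRank paper. Google's real ranking uses many more signals than this.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 50) -> dict[str, float]:
    """Estimate PageRank scores by power iteration.

    links maps each page to the pages it links to; each link is one "vote".
    """
    # Collect every page, including pages that only appear as link targets.
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    n = len(pages)

    # Start with every page ranked equally.
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Every page gets a small baseline score (the "random jump" term).
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its current rank evenly among the pages it
                # links to, so a vote from a highly ranked page is worth more.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # Assumption: a page with no outgoing links spreads its rank
                # evenly across all pages.
                share = damping * rank[page] / n
                for target in pages:
                    new_rank[target] += share
        rank = new_rank
    return rank


# Hypothetical four-page web: each key links to the pages in its list.
links = {
    "A": ["B", "C"],
    "B": ["C"],
    "C": ["A"],
    "D": ["C"],
}

ranks = pagerank(links)
for page, score in sorted(ranks.items(), key=lambda item: item[1], reverse=True):
    print(f"{page}: {score:.3f}")
```

In this toy graph, page C ranks highest because three pages link to it. Page A comes second even though only one page links to it, because that single vote comes from C, the highest-ranked page.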

Since Google's release, a variety of other search engines have launched, such as Brave Search, DuckDuckGo, and Ecosia. But what about the search engines that came before Google?

A list of earlier search engines can be found here; two that caught my eye were Aliweb and WebCrawler.

Aliweb required website owners to write index files describing their pages and submit them so that their sites would appear in the search engine. However, this process was difficult, which limited Aliweb's popularity and the size of its database.

WebCrawler was a search engine that indexed every word on the webpages it visited, so users could search for any word on a page rather than just its title. The indexing was done by a robot, also named WebCrawler, that visited pages and recorded every word on them.
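Full-text search is commonly built on an inverted index, which maps each word to the pages that contain it. Below is a simplified sketch in Python; the sample URLs, page text, and the `build_index` and `search` helpers are hypothetical, and this is not a description of how WebCrawler itself was implemented.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages: dict[str, str]) -> dict[str, set[str]]:
    """Map every word to the set of page URLs that contain it."""
    index: dict[str, set[str]] = defaultdict(set)
    for url, text in pages.items():
        for word in tokenize(text):
            index[word].add(url)
    return dict(index)


def search(index: dict[str, set[str]], query: str) -> set[str]:
    """Return the pages that contain every word in the query."""
    words = tokenize(query)
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        # Keep only pages that also contain this word.
        results &= index.get(word, set())
    return results


# Hypothetical pages standing in for what a crawler downloaded.
pages = {
    "https://example.com/spiders": "Spiders crawl the web and index every word.",
    "https://example.com/pagerank": "PageRank counts links as votes.",
    "https://example.com/history": "WebCrawler was an early web search engine.",
}

index = build_index(pages)
print(search(index, "web"))
print(search(index, "spiders crawl"))  # {'https://example.com/spiders'}
```

Because every word is indexed, a query can match words anywhere on a page, which is the advantage full-text indexing offered over searching only titles or submitted descriptions.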

By this point I had learned about web crawlers and their impact on search engines, especially through WebCrawler, so it was news to me that web crawlers and spiders are the same thing!

These spiders visit websites so their pages can be added to a search engine's index, and search engines may also store copies of those pages so results load faster. Website owners can place a robots.txt file on their site to tell web crawlers which pages should not be crawled. This file can also keep crawlers from using too many of the website's resources, since heavy crawling can slow down the site's server.
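Python's standard library includes `urllib.robotparser`, which a crawler can use to check a robots.txt file before fetching a page. In this sketch the robots.txt contents, the `MyCrawler` user agent, and the example.com URLs are made up; a real crawler would download the site's file (for example with `set_url()` and `read()`) instead of parsing a string.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: all crawlers should skip /private/ and wait
# 10 seconds between requests.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 10
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# A polite crawler checks each URL before fetching it.
print(parser.can_fetch("MyCrawler", "https://example.com/blog/post.html"))     # True
print(parser.can_fetch("MyCrawler", "https://example.com/private/notes.html"))  # False

# How long the site asks crawlers to wait between requests, in seconds.
print(parser.crawl_delay("MyCrawler"))  # 10
```

`Crawl-delay` is not part of the core robots.txt rules and not every crawler honors it, but it shows how a site can ask crawlers to limit the load they put on its server.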

Search engines have become an influential part of our lives, regardless of which web browser you use. I did not realize how important web crawlers were until I reflected on my peer's blog post about Google. With around 1.88 billion websites in the world today, I now know how the World Wide Web got its name.
